On September 26th, 1983, the Soviet early-warning system for nuclear attacks reported a missile being launched from the United States. Shortly after, the system reported four more missiles underway. Stanislav Petrov, the sole officer on duty, was confronted with a monumental decision: Should he follow proper procedure and raise the alarm, alerting the highest military officials and thereby quite possibly setting a retaliatory nuclear attack in motion?

Petrov, who had only minutes to make his decision, decided to classify the incident as a false alarm. He reasoned that a genuine attack would most likely consist of hundreds of missiles, enough to render the Soviets incapable of hitting back, rather than just five. He further considered that the detection system was relatively new and might therefore be liable to make mistakes.

Indeed, the warning system had picked up sunlight reflecting off clouds and mistaken it for intercontinental missiles. Petrov was right, and he didn't simply get lucky. Remaining clear-headed, he correctly recognized that a real nuclear first strike was very unlikely to produce the kind of evidence the warning system was showing, and, fortunately, he also factored in that warning systems, however big and fancy, tend to have a track record of giving false alarms. Petrov's rationality quite possibly saved hundreds of millions of lives that day by preventing a nuclear war.
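
Petrov's reasoning can be read as an informal application of Bayes' rule. The Python sketch below is purely illustrative: the function name `posterior` and all three probabilities are hypothetical numbers chosen for the example, not historical estimates. It shows how three factors combine into a low probability that the alarm was real: a low prior for a surprise attack, a low chance that a real attack would look like a five-missile warning, and a non-trivial false-alarm rate for a new system.

```python
def posterior(prior: float, p_alarm_if_attack: float, p_alarm_if_no_attack: float) -> float:
    """Bayes' rule: probability of an attack given that the alarm went off."""
    # Total probability of seeing this alarm, whether or not an attack is underway.
    p_alarm = prior * p_alarm_if_attack + (1 - prior) * p_alarm_if_no_attack
    return prior * p_alarm_if_attack / p_alarm


# Hypothetical, made-up inputs for illustration only:
prior = 0.0001                # assumed chance of a surprise first strike on a given day
p_alarm_if_attack = 0.01      # assumed chance a real attack shows up as just five missiles
p_alarm_if_no_attack = 0.001  # assumed chance a new, untested system raises such an alarm anyway

p = posterior(prior, p_alarm_if_attack, p_alarm_if_no_attack)
print(f"P(attack | alarm) = {p:.2%}")  # → P(attack | alarm) = 0.10%
```

Under these assumed numbers, the alarm makes an attack roughly ten times more likely than the prior suggested, yet the probability stays around 0.1%. Different inputs give a different answer, which is the point: the conclusion depends both on how plausible an attack was beforehand and on how reliable the evidence is.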

Misconceptions: what rationality is not

Properly understood, rationality is always to a person's advantage. According to this view, the stereotypical "rational character" of popular TV shows is not always rational.

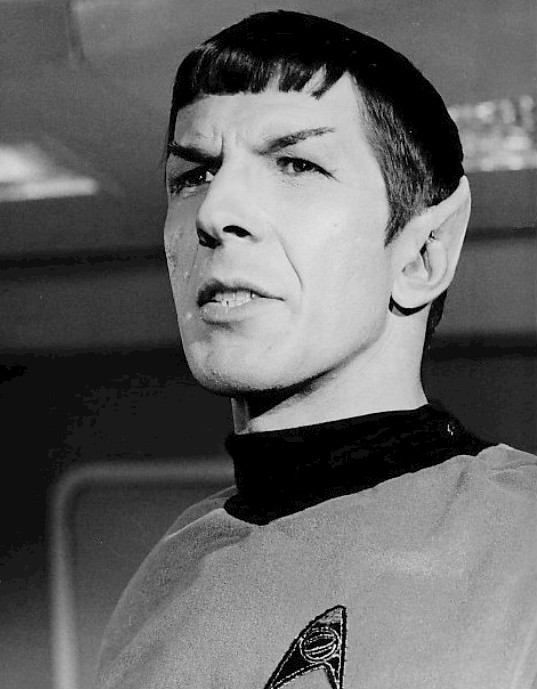

Consider Mr. Spock from Star Trek, who is judgmental, never relies on intuitions and whose emotional state is always the same: neutral. Or consider Sheldon Cooper from The Big Bang Theory, who admittedly becomes emotional quite often, but who is seemingly unable to make any decision without drawing a complicated chart.

Does being rational imply that we should constantly suppress all emotions, like Spock? Or that we’re not allowed to rely on intuitions or make decisions spontaneously? Fortunately, none of that follows. There is no general rule about how slowly or quickly rational decisions ought to be taken, or to what extent emotions are allowed to contribute to their outcome. It always depends on the specifics of the situation and, importantly, on the specific goal of a person.

With time and resources being limited, it can be irrational to spend too much time on a single decision: every hour spent deliberating is an hour not spent pursuing other goals. Similarly, emotions, although they certainly lead us astray sometimes, are often useful, and most if not all humans value them intrinsically as a central aspect of their ideal life.

Another misconception is that rationality must imply selfishness. When simplified models in economics or game theory assume that people are rational and choose the outcome that gives them the highest utility, “utility” is defined not as personal well-being, but rather as the sum of everything the person in question cares about – something that might perfectly well also include the well-being of others.
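
To make this concrete, here is a minimal Python sketch. It assumes a hypothetical linear utility function (own well-being plus a weighted share of others' well-being) and made-up numbers; real models in economics use many different utility functions. The names `Option`, `utility`, `rational_choice` and `care_for_others` are illustrative only. The same utility-maximizing rule picks the selfish option or the generous one depending solely on how much the person cares about others.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    own_wellbeing: float     # hypothetical benefit to the decision-maker
    others_wellbeing: float  # hypothetical benefit to other people


def utility(option: Option, care_for_others: float) -> float:
    """Hypothetical utility: own well-being plus weighted well-being of others."""
    return option.own_wellbeing + care_for_others * option.others_wellbeing


def rational_choice(options: list[Option], care_for_others: float) -> Option:
    """Pick the option with the highest utility for this person's goals."""
    return max(options, key=lambda o: utility(o, care_for_others))


options = [
    Option("keep the money", own_wellbeing=10.0, others_wellbeing=0.0),
    Option("donate the money", own_wellbeing=4.0, others_wellbeing=20.0),
]

print(rational_choice(options, care_for_others=0.0).name)  # → keep the money
print(rational_choice(options, care_for_others=0.5).name)  # → donate the money
```

Both choices are equally rational within this model: the two people differ only in their goals, which the utility function encodes.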

Rationality is for everyone

So, as a rational person, you are allowed to have emotions, be nice, use your intuitions and value non-quantifiable things like love and happiness. Indeed, you would probably be quite irrational if you were always cold, never trusted your intuitions and only cared about quantifiable things like the money in your bank account.

Rationality is goal-neutral: it is about achieving your goals as effectively as possible, whatever those goals may be. By definition, then, everyone has an interest in becoming more rational, because everyone wants to achieve their goals as well as possible.

This is part I of a little series on the importance of rationality and applied rational decision-making.